Comprehensive Guide to GPT-4 API Limits: Everything You Need to Know

Introduction to GPT-4 API and Its Importance

Welcome to the ultimate guide on GPT-4 API limits. If you’re exploring the capabilities of GPT-4, understanding its limitations is crucial. In this guide, we cover everything you need to know about the limits of the GPT-4 API: token, rate, message, and usage limits. Knowing them helps you use the technology effectively and avoid common pitfalls.

Whether you are a developer, a researcher, or a business owner, navigating the constraints of GPT-4 can help you maximize its potential and maintain optimal performance. Let’s dive into the specifics!

[Illustration: GPT-4 API Interface]

Understanding Different Types of Limits

Token Limits

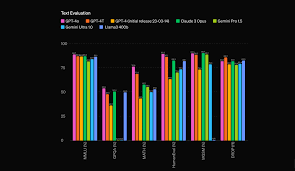

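The token limit (also called the context window) is the maximum number of tokens a single request can use, counting both your input and the model’s generated output. A token is a chunk of text that can be as short as one character or as long as one word; in English, one token averages roughly four characters. Each model has its own limit. For example, the token limit for gpt-4-turbo is much larger than that of the original gpt-4 model. A request that exceeds the limit fails with an error instead of being processed.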

Token limits for GPT-4 models range from 8,192 tokens for gpt-4 and 32,768 for gpt-4-32k up to 128,000 for gpt-4-turbo. The limit depends on the model you call, not on your account tier.
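To catch oversized requests before they reach the API, you can estimate a prompt’s size locally. The sketch below assumes the rough rule of thumb of about four characters per token; the `CONTEXT_WINDOWS` table is a hand-maintained snapshot (verify it against OpenAI’s current model documentation), and `estimate_tokens` and `fits_in_context` are hypothetical helpers. For exact counts you would use a real tokenizer such as tiktoken instead of the heuristic. Because the window covers input and output together, the check reserves room for the reply.

```python
# Approximate context windows (input + output tokens) per model.
# Hand-maintained snapshot; verify against OpenAI's current model docs.
CONTEXT_WINDOWS = {
    "gpt-4": 8_192,
    "gpt-4-32k": 32_768,
    "gpt-4-turbo": 128_000,
}


def estimate_tokens(text: str) -> int:
    """Rough token estimate: ~4 characters of English per token (heuristic only)."""
    return max(1, (len(text) + 3) // 4)


def fits_in_context(prompt: str, model: str, max_output_tokens: int) -> bool:
    """Return True if the estimated prompt plus the reserved reply fits the model's window."""
    window = CONTEXT_WINDOWS[model]
    return estimate_tokens(prompt) + max_output_tokens <= window


if __name__ == "__main__":
    prompt = "Summarize the following report. " * 2000  # ~64,000 characters
    for model in CONTEXT_WINDOWS:
        print(model, fits_in_context(prompt, model, max_output_tokens=1_000))
```

With a roughly 64,000-character prompt (about 16,000 estimated tokens) plus 1,000 tokens reserved for the reply, this reports that the request is too large for gpt-4 but fits gpt-4-32k and gpt-4-turbo.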

Rate Limits

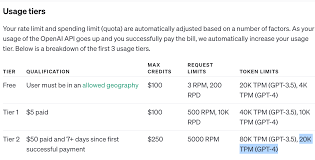

Rate limits are implemented to prevent abuse and ensure fair usage of the API. They are usually defined in requests per minute (RPM) and tokens per minute (TPM), and both apply at the same time. For instance, a GPT-4 model might have a rate limit of 40,000 TPM and 200 RPM: within one minute you can make at most 200 requests and send at most 40,000 tokens, and whichever limit you reach first blocks further requests.
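A client-side limiter can keep your application under both limits before the server has to reject anything. This is a minimal sketch assuming a rolling 60-second window; the `RollingRateLimiter` class and its injectable clock are illustrative choices, and the 200 RPM / 40,000 TPM figures are the example values above, not your account’s actual limits. OpenAI’s server-side accounting may differ in detail, so treat this as a safety margin rather than an exact replica.

```python
import time
from collections import deque
from typing import Callable, Deque, Tuple


class RollingRateLimiter:
    """Client-side guard that tracks requests and tokens sent in the last `window` seconds."""

    def __init__(self, rpm: int, tpm: int, window: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rpm = rpm
        self.tpm = tpm
        self.window = window
        self.clock = clock
        self.events: Deque[Tuple[float, int]] = deque()  # (timestamp, tokens)

    def _prune(self, now: float) -> None:
        # Drop events that have aged out of the rolling window.
        while self.events and now - self.events[0][0] >= self.window:
            self.events.popleft()

    def try_acquire(self, tokens: int) -> bool:
        """Record the request and return True only if it stays under both RPM and TPM."""
        now = self.clock()
        self._prune(now)
        used_tokens = sum(t for _, t in self.events)
        if len(self.events) + 1 > self.rpm or used_tokens + tokens > self.tpm:
            return False
        self.events.append((now, tokens))
        return True


if __name__ == "__main__":
    fake_now = [0.0]  # simulated clock so the example runs instantly
    limiter = RollingRateLimiter(rpm=200, tpm=40_000, clock=lambda: fake_now[0])
    print(limiter.try_acquire(30_000))  # True: first request
    print(limiter.try_acquire(15_000))  # False: would exceed 40,000 TPM
    fake_now[0] = 61.0
    print(limiter.try_acquire(15_000))  # True: the first request has aged out
```

When `try_acquire` returns False, the caller can sleep briefly and try again instead of sending a request the server would reject.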

Understanding your rate limits is essential for planning how you or your application will interact with the API, especially during peak usage times.

Message Limits

Message limits apply to the ChatGPT interface rather than to the API. For example, during periods of heavy demand OpenAI has capped GPT-4 in ChatGPT at 25 messages every 3 hours. The API has no separate message quota; how many calls you can make is governed by the rate limits above. If you use both products, knowing which limit applies where helps you plan your interactions without hitting unexpected barriers.

Usage Limits

Usage limits cap an organization’s overall API consumption over longer periods, such as a monthly spending limit, rather than per minute. They depend on your usage tier: OpenAI moves accounts to higher tiers automatically as total spend and account age grow, and higher tiers receive more generous limits than free or lower tiers.
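Each chat completion response reports `prompt_tokens` and `completion_tokens` in its `usage` field, so you can keep a running monthly total locally and warn yourself before an organization-level limit is reached. The `MonthlyUsageTracker` class below is a hypothetical sketch, and its quota is a budget you choose yourself, not an OpenAI setting.

```python
from datetime import date
from typing import Dict, Tuple


class MonthlyUsageTracker:
    """Keeps a running token total per calendar month against a self-chosen quota.

    monthly_token_quota is a hypothetical budget you pick yourself; it is not an
    OpenAI account setting.
    """

    def __init__(self, monthly_token_quota: int) -> None:
        self.quota = monthly_token_quota
        self.usage: Dict[Tuple[int, int], int] = {}

    def record(self, day: date, prompt_tokens: int, completion_tokens: int) -> None:
        # Input (prompt) and output (completion) tokens both count toward usage.
        key = (day.year, day.month)
        self.usage[key] = self.usage.get(key, 0) + prompt_tokens + completion_tokens

    def remaining(self, day: date) -> int:
        # Negative values mean the month's quota has been exceeded.
        return self.quota - self.usage.get((day.year, day.month), 0)

    def over_quota(self, day: date) -> bool:
        return self.remaining(day) < 0


if __name__ == "__main__":
    tracker = MonthlyUsageTracker(monthly_token_quota=1_000_000)
    tracker.record(date(2024, 5, 3), prompt_tokens=600_000, completion_tokens=300_000)
    tracker.record(date(2024, 5, 20), prompt_tokens=150_000, completion_tokens=50_000)
    print(tracker.remaining(date(2024, 5, 31)))   # -100000
    print(tracker.over_quota(date(2024, 5, 31)))  # True
    print(tracker.remaining(date(2024, 6, 1)))    # 1000000 (new month)
```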

Practical Tips for Managing GPT-4 API Limits

- Monitor Your Usage: Regularly check your usage statistics to ensure you’re within your limits.

- Optimize Requests: Split large requests into smaller, more manageable chunks to avoid reaching token limits.

- Retry Logic: Implement retry logic with exponential backoff so your application recovers gracefully from occasional rate limit errors.

- Upgrade Your Plan: If you consistently hit your limits, consider upgrading to a higher-tier plan with more generous limits.

- Batch Processing: Group similar requests together when possible to reduce the number of individual calls to the API.
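The Optimize Requests and Batch Processing tips both come down to sizing each call sensibly. As one illustration of splitting, here is a hypothetical `chunk_text` helper that greedily packs paragraphs into pieces under a token budget. It again assumes the rough four-characters-per-token estimate rather than the model’s real tokenizer, and it hard-splits any single paragraph that is too large on its own.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    # Heuristic: ~4 characters per token for English; use a real tokenizer for exact counts.
    return max(1, (len(text) + 3) // 4)


def chunk_text(text: str, max_tokens: int) -> List[str]:
    """Greedily pack paragraphs into chunks whose estimated size stays within max_tokens.

    A single paragraph larger than the budget is hard-split by characters.
    Assumes max_tokens >= 1.
    """
    max_chars = max_tokens * 4
    chunks: List[str] = []
    current = ""
    for para in text.split("\n\n"):
        # Hard-split oversized paragraphs so no piece exceeds the budget.
        pieces = [para[i:i + max_chars] for i in range(0, len(para), max_chars)] or [""]
        for piece in pieces:
            candidate = f"{current}\n\n{piece}" if current else piece
            if current and estimate_tokens(candidate) > max_tokens:
                chunks.append(current)  # current chunk is full; start a new one
                current = piece
            else:
                current = candidate
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    doc = "\n\n".join(f"Paragraph {i}: " + "lorem ipsum " * 50 for i in range(10))
    chunks = chunk_text(doc, max_tokens=400)
    print(len(chunks), [estimate_tokens(c) for c in chunks])
```

Splitting on paragraph boundaries keeps related sentences together in one request; each chunk can then be sent as its own call, ideally through a rate limiter.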

Frequently Asked Questions (FAQs)

What happens if I exceed the rate limit?

If you exceed a rate limit, the API rejects further requests with an HTTP 429 (Too Many Requests) error until enough time has passed for your limit to reset. Your application should wait before retrying rather than resending immediately.
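A common way to wait is exponential backoff with jitter: each retry waits roughly twice as long as the last, with some randomness so many clients don’t retry in lockstep. In the official openai Python package the 429 error surfaces as `openai.RateLimitError`; this sketch uses a local stand-in class of the same name so it runs without network access, and `call_with_backoff` is a hypothetical helper.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Stand-in for the HTTP 429 error raised by your API client."""


def call_with_backoff(
    fn: Callable[[], T],
    max_retries: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on RateLimitError with exponential backoff and full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # give up and surface the error to the caller
            # Upper bound grows 1s, 2s, 4s, ... capped at max_delay; jitter spreads retries out.
            delay = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, delay))
    raise AssertionError("unreachable")


if __name__ == "__main__":
    attempts = {"n": 0}

    def flaky_request() -> str:
        # Simulates a request that is rate-limited twice before succeeding.
        attempts["n"] += 1
        if attempts["n"] < 3:
            raise RateLimitError("429 Too Many Requests")
        return "ok"

    print(call_with_backoff(flaky_request, sleep=lambda s: None))  # ok
    print(attempts["n"])  # 3
```

Injecting `sleep` keeps the example instant; in production you would leave the default `time.sleep`.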

Can I increase my token limit?

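You cannot increase the token limit of a given model, because the context window is fixed per model. If your requests don’t fit, you can shorten or split them, or switch to a model with a larger window, such as gpt-4-turbo. Upgrading your usage tier raises your tokens-per-minute rate limit, not the size of a single request.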

Is there a way to bypass message limits?

No. Message limits are imposed to ensure fair usage and server stability, and you cannot bypass them. Planning your interactions and spreading them over time will help you stay within them.

How do I check my current usage?

You can check your current usage on the Usage page of your OpenAI platform account, where you’ll find detailed statistics on your token and request usage.
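Besides the dashboard, API responses carry rate-limit headers such as `x-ratelimit-remaining-requests`, `x-ratelimit-remaining-tokens`, `x-ratelimit-reset-requests` and `x-ratelimit-reset-tokens`, with reset times written as durations like `6m0s` or `20ms`. The sketch below assumes you can get those headers as a mapping with lowercase keys (HTTP header names are case-insensitive, so a plain dict may need normalizing); `parse_reset`, `RateLimitStatus` and `read_rate_limit_headers` are hypothetical names, and the sample values are illustrative, not real account data.

```python
import re
from dataclasses import dataclass
from typing import Mapping

_UNIT_SECONDS = {"h": 3600.0, "m": 60.0, "s": 1.0, "ms": 0.001}
_PART = re.compile(r"(\d+(?:\.\d+)?)(ms|h|m|s)")  # "ms" listed before "m" on purpose


def parse_reset(value: str) -> float:
    """Convert a reset duration such as '6m0s', '1s' or '20ms' into seconds."""
    if not re.fullmatch(r"(?:\d+(?:\.\d+)?(?:ms|h|m|s))+", value):
        raise ValueError(f"unrecognized duration: {value!r}")
    return sum(float(num) * _UNIT_SECONDS[unit] for num, unit in _PART.findall(value))


@dataclass
class RateLimitStatus:
    remaining_requests: int
    remaining_tokens: int
    reset_requests_s: float
    reset_tokens_s: float


def read_rate_limit_headers(headers: Mapping[str, str]) -> RateLimitStatus:
    """Extract remaining quota and reset times from x-ratelimit-* response headers."""
    return RateLimitStatus(
        remaining_requests=int(headers["x-ratelimit-remaining-requests"]),
        remaining_tokens=int(headers["x-ratelimit-remaining-tokens"]),
        reset_requests_s=parse_reset(headers["x-ratelimit-reset-requests"]),
        reset_tokens_s=parse_reset(headers["x-ratelimit-reset-tokens"]),
    )


if __name__ == "__main__":
    # Illustrative header values, not real account data.
    sample = {
        "x-ratelimit-remaining-requests": "199",
        "x-ratelimit-remaining-tokens": "39000",
        "x-ratelimit-reset-requests": "300ms",
        "x-ratelimit-reset-tokens": "1m30s",
    }
    print(read_rate_limit_headers(sample))
```

Reading these values after each call lets an application slow down before it hits a 429, instead of reacting only after the error arrives.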

What should I do if I hit my usage limits frequently?

If you frequently hit your usage limits, consider optimizing your API calls or upgrading to a higher-tier plan that offers more generous limits. Proper batching and monitoring can also help manage usage effectively.

Conclusion and Next Steps

In conclusion, understanding the various limits of the GPT-4 API is essential for effectively utilizing this powerful tool. By familiarizing yourself with token, rate, message, and usage limits, you can plan your interactions better and avoid interruptions.

Remember, managing your API usage wisely not only helps you adhere to the imposed limits but also ensures a smooth and efficient workflow. Regular monitoring and strategic planning can make a significant difference in leveraging GPT-4 to its full potential.

Looking to implement the GPT-4 API in your next project? Start by evaluating your current needs and reviewing OpenAI’s usage tiers to find the best fit for your requirements. Happy coding!